Neural Style Transfer: Generating Lyrics using Deep Residual RNNs

This post introduces Lyrics Genius, a library I developed that generates song lyrics in the style of a given singer or group. Lyrics Genius is available on GitHub.

Lyrics Genius is built on top of Keras and has the following features:

- Blazingly fast training 🔥: Lyrics Genius uses a state-of-the-art neural network architecture with skip connections, which improve the quality of the results and accelerate training.

- Works on any dataset 📝: Train on any text corpus. Because the network encodes text at the character level, you can train it on songs or poems in any language, style, or size. Works great on small datasets too!

- Autocomplete feature ✏️: Start writing text and Lyrics Genius will auto-complete the rest in the provided style.

Obtaining the Data

To collect a sizeable lyrics dataset, I first needed a lyrics repository, so I developed a web scraper that collects lyrics by artist name from the website lyrics.com.

The scraper consolidates all the lyrics from a band or artist into a single txt file, which we can then use to train our model.

For example, for the band Red Hot Chili Peppers, 1002 lyrics were found. However, some of these are duplicates, as a song might appear on multiple albums. Furthermore, the text might be incorrectly formatted.

For these reasons, the data is cleaned and the lyrics are consolidated into a uniform dataset. In the previous example, a total of 302 song lyrics are left after cleaning the data.
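The cleaning step can be pictured with a minimal sketch like the one below. It is not the code used by Lyrics Genius: the function names `normalize`, `deduplicate` and `build_corpus` are hypothetical, and the specific rules (lowercasing, collapsing whitespace, treating two songs as duplicates when their normalized text is identical) are assumptions chosen for illustration.

```python
import re
from typing import List


def normalize(text: str) -> str:
    """Lowercase, collapse whitespace inside each line, and squeeze blank lines.

    Assumption: these rules are illustrative, not the library's actual ones.
    """
    lines = [re.sub(r"\s+", " ", line).strip().lower() for line in text.splitlines()]
    cleaned = "\n".join(lines)
    # Allow at most one empty line between verses.
    return re.sub(r"\n{3,}", "\n\n", cleaned).strip()


def deduplicate(songs: List[str]) -> List[str]:
    """Keep the first copy of each song, comparing the normalized text."""
    seen = set()
    unique = []
    for song in songs:
        key = normalize(song)
        if key and key not in seen:
            seen.add(key)
            unique.append(key)
    return unique


def build_corpus(songs: List[str]) -> str:
    """Join the unique, normalized songs into one training corpus."""
    return "\n\n".join(deduplicate(songs))
```

Exact-match deduplication will miss live versions or remasters whose text differs slightly; a fuzzy comparison would catch more, at the cost of occasionally merging distinct songs.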

The Pipeline

Lyrics Genius’ architecture takes inspiration from Andrej Karpathy’s char-rnn network, which I discovered after taking Andrew Ng’s Deep Learning Specialization on Coursera.

A few improvements have been made to char-rnn in order to improve the quality of the style transfer and the training speed:

- Character embedding model that constructs vectors based on the character n-grams of the text.

- 1-Dimensional Spatial Dropout layer to drop entire 1-D feature maps (along all channels).

- Residual Bidirectional LSTM units to provide an additional shortcut from the lower layers.

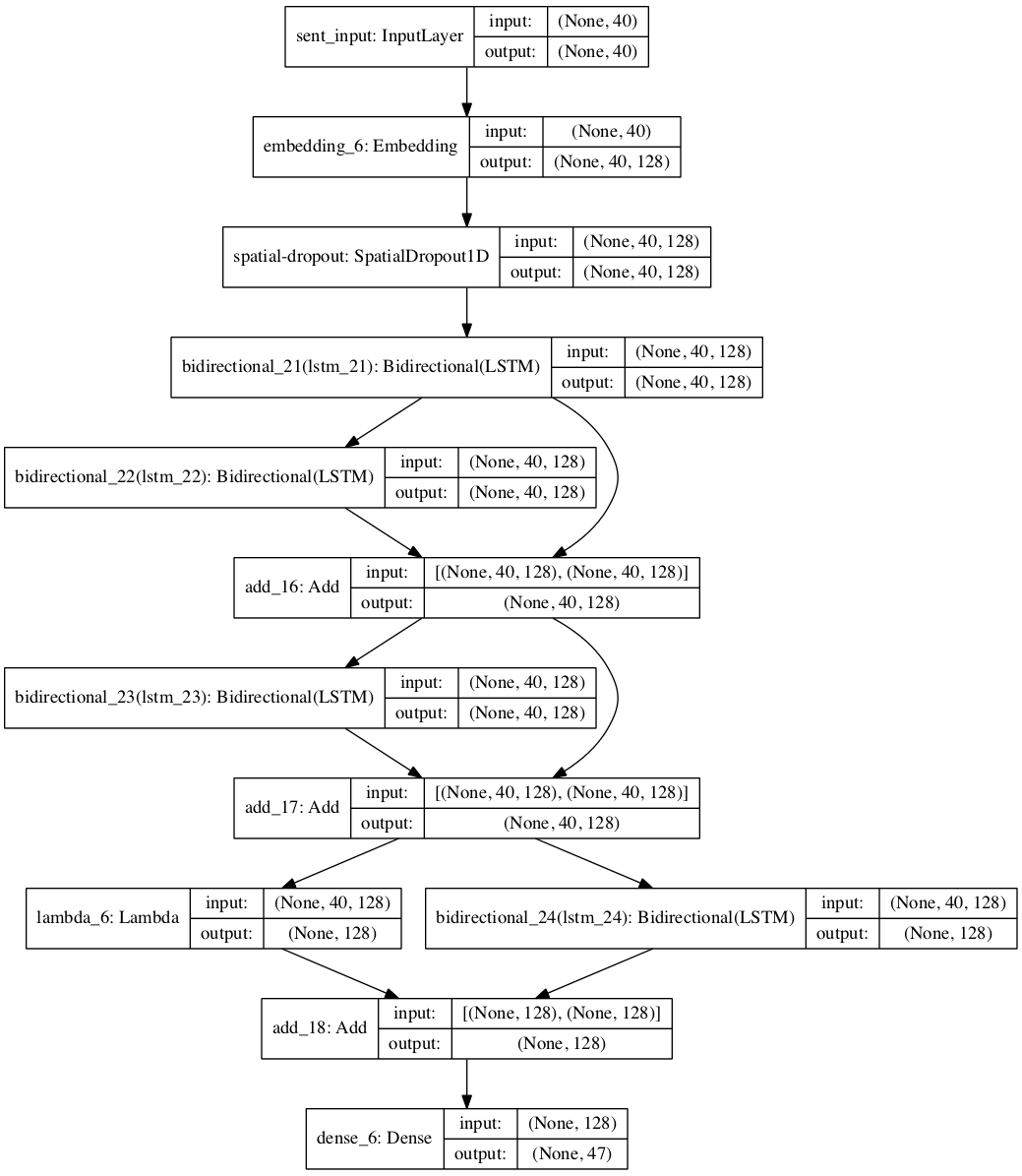

The previous diagram shows the architecture of a network generated by Lyrics Genius with 4 Residual LSTM layers. Text is scanned using a character window of size 40 (the input size) and the output is a single character (in this case our text corpus contains 47 distinct characters).
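The windowing described above can be sketched in a few lines of plain Python. This is an illustrative sketch rather than the library's code: `build_vocab`, `make_windows` and the `step` parameter are hypothetical names, and the default `tx=40` simply mirrors the window size quoted in the text.

```python
def build_vocab(text):
    """Map each distinct character in the corpus to an integer index."""
    return {ch: i for i, ch in enumerate(sorted(set(text)))}


def make_windows(text, char_to_index, tx=40, step=1):
    """Slide a window of tx characters over the text.

    Returns (inputs, targets): each input is a list of tx character indices
    and each target is the index of the character that follows the window.
    """
    inputs, targets = [], []
    for start in range(0, len(text) - tx, step):
        window = text[start:start + tx]
        inputs.append([char_to_index[ch] for ch in window])
        targets.append(char_to_index[text[start + tx]])
    return inputs, targets
```

With a corpus of 47 distinct characters, `build_vocab` would return 47 entries, which is the number of classes the network has to choose between for every window.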

The network is divided into the following components:

- An input layer that takes a window of the text of size Tx. In this specific case, Tx is 40.

- The input is projected to embedding vectors. In this case, the dimension of these vectors is 128.

- A 1-Dimensional Spatial Dropout layer is added to provide regularization.

- 4 layers of Residual Bidirectional LSTMs are added. In our example, each layer has 64 units for the forward state and 64 for the backward state, bringing the total number of units per LSTM layer to 128.

- Finally, a Dense layer to predict which character will appear next. In our example, the dataset used as input contained 47 unique characters. Therefore, there will be 47 dense units with a softmax activation function.
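To see how the numbers in this list fit together, the sketch below traces the per-example tensor shape through each component. It is a back-of-the-envelope check, not the library's code. It assumes the shortcut is an element-wise addition of a layer's input and output, as in standard residual connections; under that assumption the 2 × 64 = 128 bidirectional outputs must match the 128-dimensional embedding. How the sequence is reduced to a single vector before the Dense layer is not stated here, so the trace only records the final output size. The function name `trace_shapes` is hypothetical.

```python
def trace_shapes(tx=40, vocab_size=47, embedding_dim=128, lstm_units=64, num_layers=4):
    """Return (layer name, per-example output shape) for the described stack."""
    shapes = [
        ("input", (tx,)),                            # window of character indices
        ("embedding", (tx, embedding_dim)),          # one vector per character
        ("spatial_dropout_1d", (tx, embedding_dim))  # dropout keeps the shape
    ]
    features = embedding_dim
    for layer in range(1, num_layers + 1):
        bi_out = 2 * lstm_units  # forward and backward states concatenated
        # Assumption: the shortcut adds input and output element-wise,
        # so both must have the same number of features.
        if bi_out != features:
            raise ValueError(
                "residual add needs %d features, got %d" % (features, bi_out)
            )
        shapes.append(("residual_bilstm_%d" % layer, (tx, bi_out)))
        features = bi_out
    shapes.append(("dense_softmax", (vocab_size,)))  # one probability per character
    return shapes


for name, shape in trace_shapes():
    print(name, shape)
```

Changing `embedding_dim` or `lstm_units` independently makes the check fail, which shows why the two values are tied together when the shortcut is a plain addition.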

Results

After training the network on several datasets, I have collected a set of interesting results obtained from the model.

The following results were all obtained with the network configuration presented in the previous section, and every example was trained for 10 epochs. Better results would require more epochs; this configuration was chosen to show that the results are already acceptable with little training.
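Each sample below starts from a short input that the model then extends one character at a time. A generation loop of that kind can be sketched as follows. This is a hedged illustration, not the library's API: `generate`, `sample_index` and the `predict_proba` callback (standing in for the trained network) are hypothetical names, and both the temperature parameter and the padding of short seeds with spaces are assumptions.

```python
import math
import random


def sample_index(probabilities, temperature=1.0, rng=random):
    """Pick an index from a probability distribution.

    temperature <= 0 means greedy (argmax); higher values flatten the
    distribution and give more varied text. (Assumed knob, not from the post.)
    """
    if temperature <= 0:
        return max(range(len(probabilities)), key=probabilities.__getitem__)
    logits = [math.log(max(p, 1e-12)) / temperature for p in probabilities]
    peak = max(logits)
    weights = [math.exp(value - peak) for value in logits]
    return rng.choices(range(len(weights)), weights=weights, k=1)[0]


def generate(seed, predict_proba, index_to_char, tx=40, length=200,
             temperature=1.0, pad=" "):
    """Extend `seed` by `length` characters.

    predict_proba(window) must return one probability per character for the
    tx-character window; here it is a placeholder for the trained model.
    """
    text = seed
    for _ in range(length):
        # Use the last tx characters; left-pad seeds shorter than the window.
        window = text[-tx:].rjust(tx, pad)
        probabilities = predict_proba(window)
        text += index_to_char[sample_index(probabilities, temperature)]
    return text
```

Greedy decoding tends to fall into loops, which is one plausible reason repeated phrases show up in character-level samples; sampling with a temperature trades some coherence for variety.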

Red Hot Chili Peppers

- Input: Giving meaning

Generated Text:

Giving meaning to the sun

i’ll got to go the sun is a like the bright on the brother pace the sun

the brother the brother start

i can’t be a like me and i’ll be a like the brother baby i want to go to go

i cause i’ll see you want to go

i know i’ll see it away give it away give it away give it away now

give it away give it away give it away now

Gorillaz

- Input: Gravity on me

Generated Text:

gravity on me (show many people ready to rock the house?)

désolé

désolé

désolé

(how many people ready to rock the house?)

come on the day

but i got a hold it down the days the back it all me down

when you’re start the start and decire

i am in the beat you don’t played it down on the dj spin

i want y’all to just get down

now you got to just get down

now the m-c rhymin’ and the dj cuttin’

Kendrick Lamar

- Input: The sun

Generated Text:

The sun is shining on the start life and we gon’ it out of a bitch

i know the shit, you see the streets of a life

and i got a man, the streets on the streets and i see that’s the problem

i got me say i gotta be work the streets to the come and i don’t know the

problem and make you say the starts and the streets and we gotta streets and i

gotta know it bitch the start is the streets and i stay it on the way i gotta be

Shakespeare

- Input: Look in thy glass and tell

Generated Text:

Look in thy glass and tell:

he what thou hath i self that the surves worth which,

that with time that my self love to me,

that who waker thine breast me conted good,

thy beauty the conter beauty’s fast my love,

and not to be that my love in shall my self your will,

that which i not the surmer be that my sand

Conclusion

Lyrics Genius provides a fast way to perform style transfer from text datasets. Whether you want to write lyrics or poems, or generate post titles for your online forum, Lyrics Genius offers an easy way to get quick results from nothing more than a text corpus.

For more information on how to use Lyrics Genius, visit the project’s GitHub repository.

References

- char-rnn: GitHub repository

- Residual LSTM: paper

- Deep Learning Specialization: Coursera course